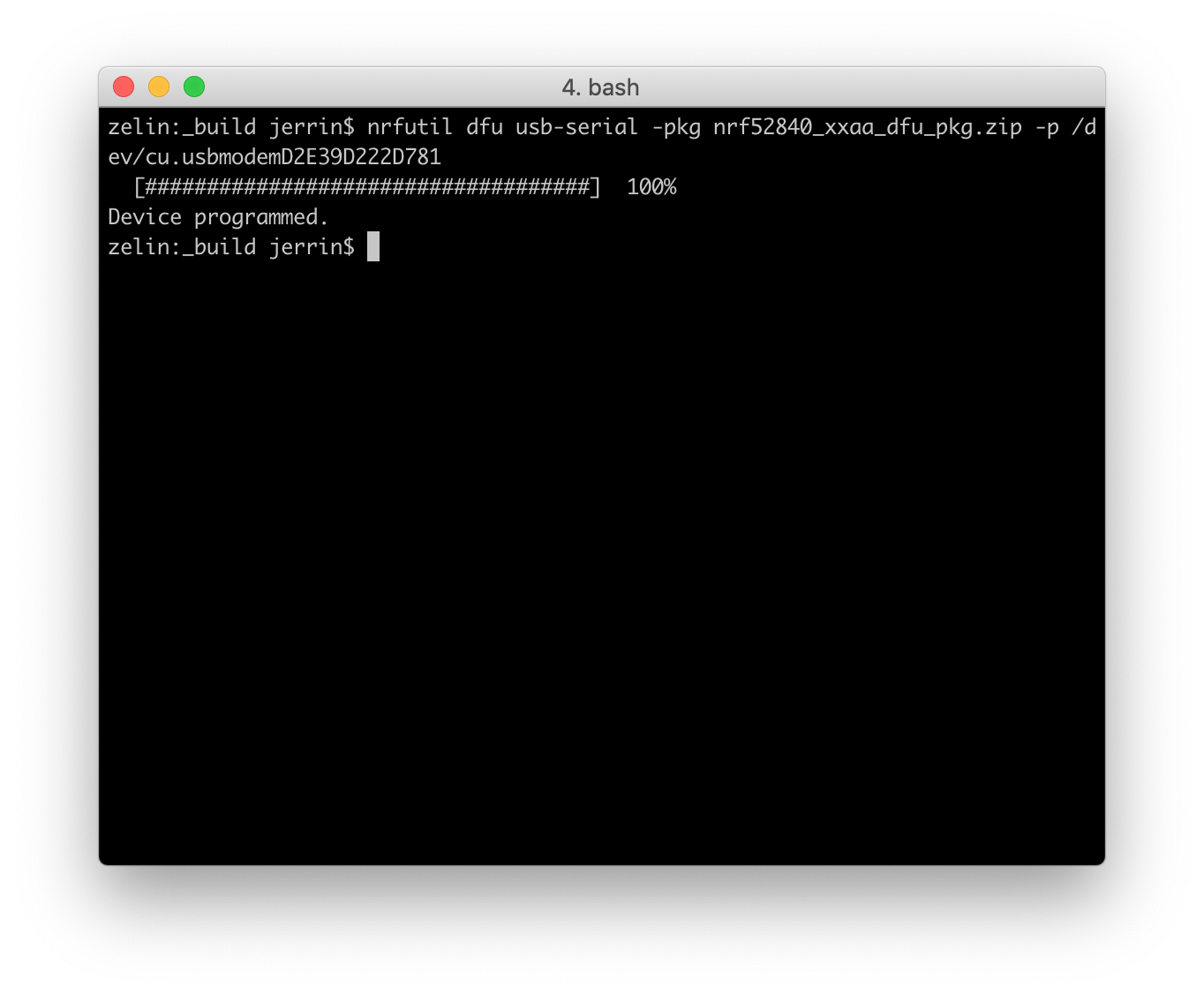

Training a model with poptorch.trainingModel:

    import torch
    import poptorch


    class ExampleModelWithLoss(torch.nn.Module):
        def __init__(self):
            super().__init__()
            # Assumed: the layer sizes did not survive; 10 is used here.
            self.fc = torch.nn.Linear(10, 10)
            self.loss = torch.nn.MSELoss()

        def forward(self, x, target=None):
            fc = self.fc(x)
            if self.training:
                return fc, self.loss(fc, target)
            return fc


    torch.manual_seed(0)
    model = ExampleModelWithLoss()

    # Wrap the model in our PopTorch annotation wrapper.
    poptorch_model = poptorch.trainingModel(model)

    # Some dummy inputs (sizes assumed to match the layer above).
    input = torch.randn(10)
    target = torch.randn(10)

    # Train on IPU.
    for i in range(0, 800):
        # Each call here executes the forward pass, loss calculation, and backward
        # pass in one step.
        # Model input and loss function input are provided together.
        poptorch_out, loss = poptorch_model(input, target)
        print(f"{i}: {loss}")

    # Copy the trained weights from the IPU back into the host model.
    poptorch_model.copyWeightsToHost()

    # Execute the trained weights on host.
    model.eval()
    native_out = model(input)

    # Models should be very close to native output although some operations are
    # numerically different and floating point differences can accumulate.
    torch.testing.assert_close(native_out, poptorch_out, rtol=1e-04, atol=1e-04)

Running inference with poptorch.inferenceModel:

    import torch
    import torchvision
    import poptorch

    # Some dummy imagenet sized input.
    picture_of_a_cat_here = torch.randn([1, 3, 224, 224])

    # The model, in this case a MobileNet model with pretrained weights that comes
    # canned with Pytorch.
    model = torchvision.models.mobilenet_v2(pretrained=True)
    model.train(False)

    # Wrap in the PopTorch inference wrapper
    inference_model = poptorch.inferenceModel(model)

    # Execute on IPU.
    out_tensor = inference_model(picture_of_a_cat_here)

    # Get the top 5 ImageNet classes.
    top_five_classes = torch.topk(torch.softmax(out_tensor, 1), 5)
    print(top_five_classes)

    # Try the same on native PyTorch
    native_out = model(picture_of_a_cat_here)

    native_top_five_classes = torch.topk(torch.softmax(native_out, 1), 5)

    # Models should be very close to native output although some operations are
    # numerically different and floating point differences can accumulate.
    assert any(top_five_classes.indices[0] == native_top_five_classes.indices[0])

Running inference in half precision:

    model = torch.nn.Linear(1, 10)

    # Convert the parameters (weights) to halfs. Without doing so,
    # the Linear parameters will automatically be cast to half, which allows
    # training with float32 parameters but half tensors.
    model.half()

    t1 = torch.tensor([1.]).half()

    opts = poptorch.Options()

    inference_model = poptorch.inferenceModel(model, opts)
    out = inference_model(t1)

    assert out.dtype == torch.half

In general, we advise pipelining over as few IPUs as possible. However, you may need to experiment to find the optimal pipeline length. In some corner cases, a longer pipeline can lead to faster throughput.

There are four kinds of execution strategies that you can use to run a model on a multi-IPU device. The default execution strategy is PipelinedExecution. We first introduce the general functions that are relevant to all four strategies. We then explain the four strategies with examples.

By default, PopTorch will not let you run the model if the number of IPUs is not a power of 2. For this reason, it is preferable to annotate the model so that the number of IPUs used is a power of 2. However, you can also enable autoRoundNumIPUs() to automatically round up the number of IPUs reserved to a power of 2. This option is not enabled by default to prevent unintentional overbooking of IPUs.

Annotating a Hugging Face BERT model so that its layers are placed on different IPUs:

    import transformers
    import torch
    import poptorch

    # A bert model from hugging face. See the packaged BERT example for actual usage.
    pretrained_weights = 'mrm8488/bert-medium-finetuned-squadv2'


    # For later versions of transformers, we need to wrap the model and set
    # return_dict to False
    class WrappedModel(torch.nn.Module):
        def __init__(self):
            super().__init__()
            # Assumed: a question-answering head, matching the SQuAD v2 checkpoint.
            self.wrapped = transformers.BertForQuestionAnswering.from_pretrained(
                pretrained_weights)

        def forward(self, input_ids, attention_mask, token_type_ids):
            return self.wrapped.forward(input_ids,
                                        attention_mask,
                                        token_type_ids,
                                        return_dict=False)

        def __getattr__(self, attr):
            try:
                return torch.nn.Module.__getattr__(self, attr)
            except AttributeError:
                return getattr(self.wrapped, attr)


    model = WrappedModel()

    # A handy way of seeing the names of all the layers in the network.
    print(model)

    # All layers before "model.bert.encoder.layer[0]" will be on IPU 0 and all
    # layers from "model.bert.encoder.layer[0]" onwards (inclusive) will be on
    # IPU 1. (Assumed layer path; only the trailing "layer" survives.)
    model.bert.encoder.layer[0] = poptorch.BeginBlock(model.bert.encoder.layer[0],
                                                      ipu_id=1)

    # Now all layers before this layer are on IPU 1 and this layer onward is on IPU 2
    ...
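To make the power-of-2 rule above concrete, here is a minimal sketch of the arithmetic involved in rounding an IPU count up. The helper name `round_up_to_power_of_two` is hypothetical and is not part of PopTorch; it only mirrors what autoRoundNumIPUs() is described as doing, under the assumption that the reserved count is the smallest power of 2 that is at least the requested count.

```python
def round_up_to_power_of_two(num_ipus: int) -> int:
    """Return the smallest power of 2 that is >= num_ipus.

    Hypothetical helper for illustration only; not a PopTorch function.
    """
    if num_ipus < 1:
        raise ValueError("num_ipus must be at least 1")
    # (n - 1).bit_length() is the exponent of the next power of 2 at or above n.
    return 1 << (num_ipus - 1).bit_length()


def idle_ipus(num_ipus: int) -> int:
    """Number of IPUs that would be reserved but unused after rounding up."""
    return round_up_to_power_of_two(num_ipus) - num_ipus


if __name__ == "__main__":
    for requested in (1, 2, 3, 4, 5, 6):
        reserved = round_up_to_power_of_two(requested)
        print(f"requested={requested} reserved={reserved} "
              f"idle={idle_ipus(requested)}")
```

A model annotated across 3 IPUs would reserve 4 under this rule, leaving one reserved IPU unused, which is the kind of overbooking the default setting guards against.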
